Self-hosted Gen AI

Easily import open-source LLMs and other generative AI models into Zerve and run them in your own secure environment. No more leaking data or sending your prompts to third-party services.

Seamlessly fine-tune LLMs and enhance your prompts using retrieval-augmented generation (RAG)
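In RAG, the documents most relevant to a question are retrieved and placed into the prompt before it reaches the model. The sketch below illustrates that idea without any dependencies. It swaps in a simple bag-of-words cosine similarity where a real pipeline would use an embedding model and a vector store. The function names `retrieve` and `build_prompt` are hypothetical and are not part of Zerve's API.

```python
import math
import re
from collections import Counter


def tokenize(text: str) -> list[str]:
    # Lowercase and split into alphanumeric tokens.
    return re.findall(r"[a-z0-9]+", text.lower())


def cosine(a: Counter, b: Counter) -> float:
    # Cosine similarity between two term-frequency vectors.
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(query: str, docs: list[str], k: int = 2) -> list[str]:
    # Rank documents by similarity to the query and keep the top k.
    q = Counter(tokenize(query))
    ranked = sorted(docs, key=lambda d: cosine(q, Counter(tokenize(d))), reverse=True)
    return ranked[:k]


def build_prompt(query: str, docs: list[str], k: int = 2) -> str:
    # Prepend the retrieved context to the user's question.
    context = "\n".join(f"- {d}" for d in retrieve(query, docs, k))
    return (
        "Answer using only the context below.\n\n"
        f"Context:\n{context}\n\n"
        f"Question: {query}\nAnswer:"
    )
```

The resulting string is what you would send to a self-hosted model. Only the retrieved passages are included, which keeps the prompt short and grounded in your own data.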

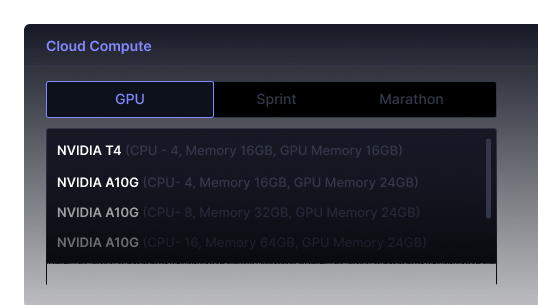

GPU Infrastructure

Zerve's underlying serverless architecture lets you configure GPUs at a granular level, so you use GPUs only where you specifically need them and they run only when required.

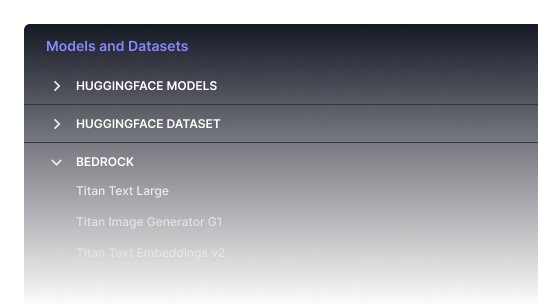

Hugging Face & Bedrock

Import and fine-tune the latest Gen AI datasets and models directly from Hugging Face and Bedrock.

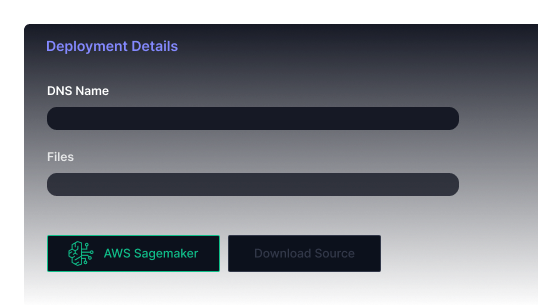

Deployment

Deploy your model to SageMaker to use GPUs at inference time.

Your platform for importing, fine-tuning, and deploying Gen AI in the enterprise